Building an enterprise-level AI module for travel insurance claims is complex. Claims processing requires handling diverse data formats, interpreting detailed information, and applying judgment beyond simple automation.

When developing Lea’s AI claims module, we faced challenges like outdated legacy systems, inconsistent data formats, and evolving fraud tactics. These hurdles demanded not only technical skill but also adaptability and problem-solving.

In this article series, we’ll share the in-depth journey of building Lea’s AI eligibility assessment module: the challenges, key insights, and technical solutions we applied to create an enterprise-ready system for travel insurance claims processing.

Key Learnings:

When we started developing our solution, the AI landscape was changing quickly, which created both opportunities and challenges for model development and deployment. Models were also less capable than they are today: GPT models, for example, could handle only 8k tokens of context and offered limited functionality. That pace of change complicated long-term architectural decisions.

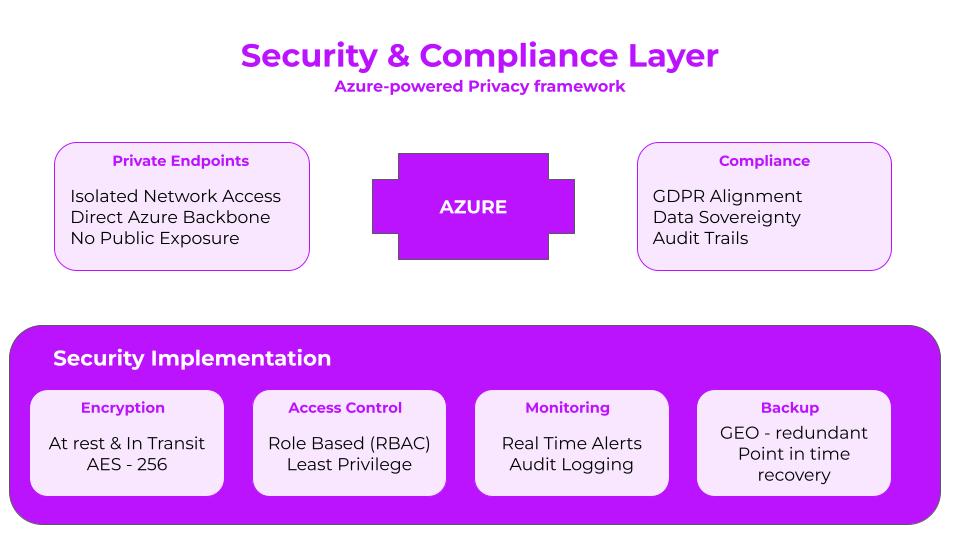

In this dynamic environment, our priority was to deploy the best available model on a platform that offered both performance and a strong privacy framework. Without a viable local model, we needed an infrastructure providing robust model capabilities while respecting data privacy—a key requirement in the insurance industry.

Why Microsoft Azure?

Access to Leading AI Models with Privacy Assurance

We chose Azure largely for its strategic partnership with OpenAI, which gave us access to top-tier AI models with privacy at the forefront. We initially explored OpenAI’s offerings via Azure and then moved to private endpoints to keep tighter control over data privacy.

Trusted Brand for the Insurance Sector

Azure’s brand image aligns well with the high data security standards expected by our insurance clients. Microsoft’s reputation and compliance standards resonate within the financial and insurance sectors, providing reassurance for data-sensitive claims processing.

Future-Ready Flexibility in a Rapidly Changing AI Environment

With AI model capabilities advancing constantly, Azure gives us room to adopt new models as they arrive. Its support for Infrastructure as Code (IaC), combined with our cloud-agnostic design, lets us change the infrastructure as new advancements emerge while minimizing disruption.

In short, Azure’s advanced AI capabilities, strong privacy standards, and industry-trusted brand make it ideal for navigating the uncertainties of the AI landscape and meeting the rigorous demands of the insurance industry.

Building a Resilient Infrastructure for AI-Driven Claims Processing

In AI-driven claims processing, creating a resilient, flexible infrastructure that efficiently manages vendor and component dependencies is essential for scalability, cost control, and regulatory compliance. Ancileo’s system prioritizes flexibility, security, and operational continuity within a fast-evolving industry.

Vendor dependency in AI-driven solutions offers strategic advantages but also requires careful management. As AI capabilities evolve, reliance on third-party infrastructure and tools demands a proactive approach. Ancileo’s infrastructure leverages best-in-class technology without compromising flexibility or scalability, ensuring vendor alignment with long-term goals in this dynamic environment.

Strategic Choice of Azure for AI and Compliance

Ancileo’s infrastructure, hosted on Microsoft Azure, benefits from Azure’s compliance framework, security standards, and integration with OpenAI. This makes it ideal for managing data-sensitive claims processing in the travel insurance industry.

Cloud Flexibility with Infrastructure as Code (IaC) for Client-Centric Adaptability

Using Infrastructure as Code (IaC) with Terraform and Helm, Ancileo’s system brings adaptability and resilience, allowing migration across cloud providers based on client preferences or regulatory needs. Ancileo’s cloud-agnostic design avoids vendor lock-in through abstracted infrastructure configurations.
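To make the idea of abstracted infrastructure configuration more concrete, here is a minimal sketch rather than a description of the production setup. It assumes a hypothetical provider-neutral `DeploymentTarget` per client and renders it as JSON of the kind a Terraform `.tfvars.json` variable file accepts. The variable names (`cloud_provider`, `region`, `replica_count`) and the region allow-list are illustrative assumptions.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical allow-list of regions per provider. Real values would be
# derived from each client's contractual and regulatory requirements.
ALLOWED_REGIONS = {
    "azure": {"southeastasia", "westeurope"},
    "aws": {"ap-southeast-1", "eu-west-1"},
}


@dataclass(frozen=True)
class DeploymentTarget:
    """Provider-neutral description of where one client's stack runs."""
    client: str
    cloud_provider: str
    region: str
    replica_count: int = 2


def render_tfvars(target: DeploymentTarget) -> str:
    """Validate the target and render it as JSON for a .tfvars.json file."""
    regions = ALLOWED_REGIONS.get(target.cloud_provider)
    if regions is None:
        raise ValueError(f"unsupported provider: {target.cloud_provider}")
    if target.region not in regions:
        raise ValueError(
            f"region {target.region!r} is not allowed for {target.cloud_provider}"
        )
    return json.dumps(asdict(target), indent=2, sort_keys=True)


if __name__ == "__main__":
    # Moving a client to another provider or region is a data change only.
    print(render_tfvars(DeploymentTarget("insurer-a", "azure", "westeurope")))
```

In a layout like this, the Terraform modules stay the same while the per-client file changes, which is one way a data-localization requirement could become a reviewed configuration change instead of a rewrite.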

Containerized Deployment for Cross-Platform Compatibility and Scalability

Containerizing the AI module with Docker and orchestrating deployment with Kubernetes gives us a portable environment that runs across platforms without reconfiguration.

Ancileo builds on open-source libraries, frameworks, and tools, including Celery, FastAPI, Uvicorn, Docker, Kubernetes, PyTorch, scikit-learn, spaCy, Tesseract, LangGraph, Pillow, and PyMuPDF, to minimize vendor dependency and increase adaptability.

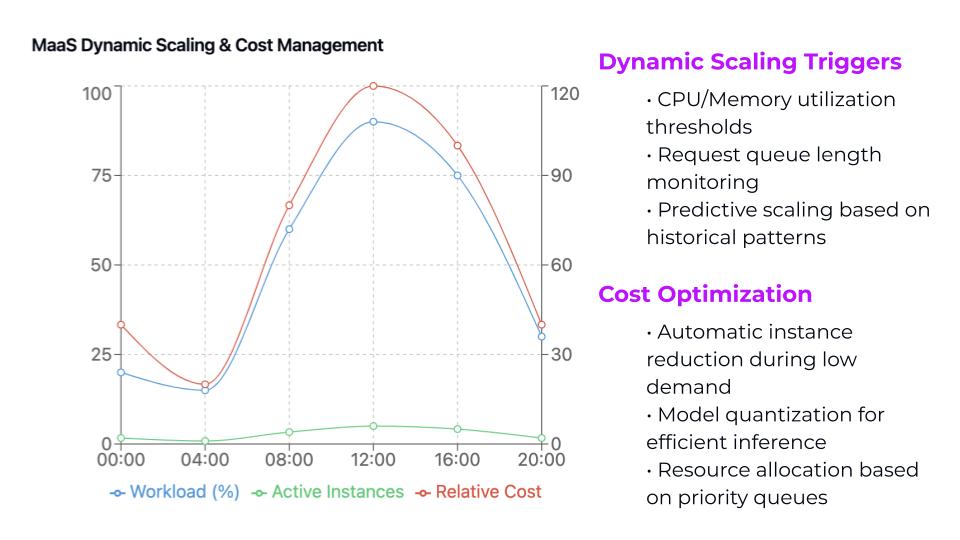

Optimized Resource Allocation and Cost Management with Model-as-a-Service (MaaS)

Ancileo’s system uses Model-as-a-Service (MaaS) for selective AI processing, balancing cloud costs with processing power.
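One way to read "selective AI processing" is as a routing decision: a document goes to a hosted, pay-per-use model only when cheaper local steps are not enough. The sketch below is an assumption about how such a gate could look, not a description of Ancileo's actual logic. `ClaimDocument`, `Route`, and `OCR_CONFIDENCE_THRESHOLD` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    LOCAL_RULES = "local_rules"    # deterministic checks, no model call
    HOSTED_MODEL = "hosted_model"  # pay-per-use model endpoint


@dataclass
class ClaimDocument:
    doc_type: str          # e.g. "boarding_pass", "medical_report"
    ocr_confidence: float  # 0.0 to 1.0, as reported by the OCR step
    is_structured: bool    # True if fields parsed into a known schema


# Assumed cut-off; a real value would be tuned on labelled claims.
OCR_CONFIDENCE_THRESHOLD = 0.9


def choose_route(doc: ClaimDocument) -> Route:
    """Send only documents that local processing cannot handle to the model."""
    if doc.is_structured and doc.ocr_confidence >= OCR_CONFIDENCE_THRESHOLD:
        return Route.LOCAL_RULES
    return Route.HOSTED_MODEL


if __name__ == "__main__":
    clean = ClaimDocument("boarding_pass", 0.97, True)
    messy = ClaimDocument("medical_report", 0.62, False)
    print(choose_route(clean).value, choose_route(messy).value)
```

Keeping the decision in one small function makes the cost trade-off explicit and easy to adjust as model pricing or local capabilities change.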

Ancileo’s system continuously monitors vendor and component performance, making proactive adjustments to stay aligned with client needs and technological advancements.

Real-World Application

Ancileo’s adaptable, cloud-agnostic architecture is built to absorb sudden shifts in demand. During major travel disruptions, such as natural disasters, Kubernetes scales the containerized services horizontally, deploying new instances to handle the increased claim volume.
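For readers unfamiliar with how Kubernetes decides on horizontal scaling, the Horizontal Pod Autoscaler documentation gives the core rule as `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces that arithmetic in Python. The queue-depth metric and the replica bounds are assumptions for illustration, not production settings.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 20,
    tolerance: float = 0.1,
) -> int:
    """Replica count following the documented HPA scaling rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the deployment as it is.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds, as minReplicas/maxReplicas would.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Assumed metric: pending claims per worker, with a target of 100.
    print(desired_replicas(4, 250, 100))  # -> 10
    print(desired_replicas(4, 105, 100))  # -> 4 (within tolerance)
```

With a target of 100 pending claims per worker, a surge to 250 per worker on 4 replicas asks for 10 replicas, while a small fluctuation to 105 leaves the deployment unchanged.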

Using IaC, Ancileo can adjust its infrastructure for client-specific needs or regulatory mandates. For example, if a new privacy regulation requires data localization, the affected workloads can be moved to a compliant cloud region without downtime.

Combining vendor partnerships, containerization, IaC, open-source flexibility, and optimized AI processing through MaaS, Ancileo’s infrastructure offers a scalable, cost-efficient solution for travel insurers. The system adapts to the industry’s needs, ensuring robust operational continuity, security, and flexibility.